2025-10-19_SATURDAY_POST

3I/ATLAS Simulated Kaku Astro Atlas Likeness Law

Why Astro Atlas Is Still Online

Meme Sources for WordPress Posts

#tags 3I/ATLAS, Astro-Atlas, AI-Impersonation, Config-Legal, Visitor-Physics, Archive-Post

Me: Astro Atlas Channel, “3I/ATLAS Just BROKE The Laws Of Physics In A Way ‘Oumuamua’ NEVER Could” | Michio Kaku https://www.youtube.com/watch?v=GZCPgL4CJSg

Note: Michio Kaku is NOT actually in this video; it's an AI version of him. First, tell me about this channel, then tell me about the video.

Copilot: Astro Atlas is a fan-made YouTube channel that uses AI-generated narration inspired by Michio Kaku’s style. The video explores the anomalous behavior of 3I/ATLAS, suggesting it may be an interstellar technology actively maneuvering in our solar system.

🛰️ About the Astro Atlas Channel

Astro Atlas is a small, independent YouTube channel with around 2.65K subscribers. It’s not affiliated with Michio Kaku or any official scientific institutions. Instead, it creates speculative science content inspired by Kaku’s public lectures and writings. The channel uses AI-generated narration—including synthetic voices that mimic Kaku’s tone—for educational and inspirational storytelling. Its focus is on dramatic, high-concept astrophysics narratives that blend real data with speculative interpretation.

📹 About the Video: “3I/ATLAS Just BROKE The Laws Of Physics…”

This video, uploaded on October 19, 2025, dives into the mysterious behavior of 3I/ATLAS, the third confirmed interstellar object to enter our solar system. It builds on the legacy of ‘Oumuamua (2017) and Borisov (2019), but argues that 3I/ATLAS is doing something unprecedented:

Key Claims from the Video:

- Micro-deceleration: Unlike natural comets that accelerate due to solar radiation, 3I/ATLAS is slowing down—a behavior that violates known physics.

- Confirmed by multiple observatories: James Webb, Mars orbiters, and ground-based radar all report a velocity drop from 68.2 km/s to 67.9 km/s between Sept 23–26.

- Reflective surface & stable rotation: Suggests a solar sail-like mechanism or active propulsion.

- Unusual chemistry: Its coma is 95% carbon dioxide, unlike typical comets, whose comas are dominated by water vapor.

- Trajectory precision: Its Mars flyby and upcoming Jupiter encounter show timing too precise to be random (less than a 1 in 50,000 chance).

- Three theories proposed:

  - Reverse solar sail acting as a photon parachute.

  - Undetectable propulsion (e.g., ion or electromagnetic drive).

  - Gravitational manipulation via planetary flybys.

Upcoming Milestones:

- Perihelion (Oct 29–31): If 3I/ATLAS survives intact or decelerates again, the probability of an artificial origin jumps above 70%.

- Earth observation (Dec 19) and Jupiter flyby (March 2026): Further trajectory changes could confirm intelligent control.

Tone and Purpose:

The video is speculative but grounded in real observational data. It uses dramatic narration to challenge conventional astrophysics and provoke thought about interstellar technology and our readiness to recognize it.

Would you like a symbolic config riff on this escalation—from ‘Oumuamua’s whisper to 3I/ATLAS’s cosmic brake test? Or just a clean summary for your archive?

Me: I am wondering why this channel is still up. Does Michio Kaku approve of this channel’s use of his simulated face and speech?

Copilot: Astro Atlas is still active because it labels itself as a fan-made educational project and uses disclaimers to avoid impersonation violations. There is no public evidence that Michio Kaku has approved or endorsed the channel’s use of his simulated voice or likeness.

🧠 Why Astro Atlas Is Still Online

The Astro Atlas YouTube channel explicitly states that it is an independent fan-made project with no official connection to Michio Kaku or his affiliated organizations. It uses AI-generated narration inspired by Kaku’s public lectures and interviews, and includes a disclaimer that the synthesized voice “does not belong to Michio Kaku”.

This kind of disclaimer is often enough to avoid a takedown under YouTube’s impersonation or copyright policies, especially when:

- The content is labeled as educational or speculative.

- The voice and likeness are synthetic recreations, not direct recordings.

- There’s no commercial exploitation of Kaku’s name or image (e.g., selling merchandise or claiming endorsement).

Unless Kaku or his legal team files a formal complaint for misrepresentation, defamation, or unauthorized use of likeness, YouTube typically allows such content to remain online.

🚫 Has Michio Kaku Approved This?

There is no public record, statement, or endorsement from Michio Kaku approving Astro Atlas’s use of his simulated voice or image. In fact, multiple science blogs and commentary sites have noted that:

- Kaku has not made any of the statements attributed to him in these videos.

- The viral headlines (e.g., “Michio Kaku Issues URGENT WARNING”) are not backed by his official channels or publications.

This suggests the channel is operating without his direct involvement or consent.

⚠️ Ethical and Legal Gray Zone

While Astro Atlas may not violate YouTube’s policies outright, it occupies a gray area:

- Ethically, it risks misleading viewers who believe they’re hearing Kaku himself.

- Legally, it could face action if Kaku asserts rights over his voice, likeness, or brand—especially if the content becomes widely misattributed or monetized.

If you’re archiving or referencing this channel, it’s worth noting that its content is speculative fiction wrapped in scientific aesthetics, not peer-reviewed science or verified statements from Michio Kaku.

Would you like a config-style header to mark this as “Simulated Kaku | Astro Atlas | Unofficial Channel”? Or a symbolic riff on AI impersonation in the age of cosmic anomalies?

- Astro Atlas https://www.youtube.com/channel/UCQCDDTQmtXQsyLfNsp9TcMw

- Science & Nature https://blog.sciandnature.com/2025/09/michio-kaku-issues-urgent-warning.html

Me: How about a short essay on the legal ramifications, especially considering that Michio Kaku does not appear to have given any pre-approval?

Copilot: Certainly. Here’s a short essay exploring the legal ramifications of Astro Atlas’s use of a simulated Michio Kaku likeness and voice without apparent pre-approval:

🧑‍⚖️ Simulated Likeness, Real Consequences: The Legal Ramifications of Using AI to Impersonate Public Figures

In the age of synthetic media, the line between homage and impersonation grows increasingly thin. The Astro Atlas YouTube channel, which features AI-generated narration mimicking physicist Michio Kaku, raises pressing legal questions about voice cloning, likeness rights, and digital misrepresentation—especially given that no public record suggests Kaku’s prior approval.

At the heart of the issue lies the concept of the right of publicity, a legal doctrine that protects individuals from unauthorized commercial use of their name, image, or likeness. While this right is strongest in states like California and New York, it’s increasingly relevant nationwide as AI tools make it trivial to simulate a public figure’s voice and face. If Astro Atlas’s content leads viewers to believe that Kaku is personally endorsing or narrating the videos, it could constitute false endorsement—a violation under both state publicity laws and federal trademark law via the Lanham Act.

Even if Astro Atlas includes disclaimers stating that the voice is synthetic and the channel is unofficial, such notices may not fully shield it from liability. Courts often consider the overall impression on viewers, not just fine print. If the simulated voice and visual style are convincing enough to mislead a reasonable person, the channel could be exposed to claims of misappropriation, defamation, or unfair competition.

Moreover, YouTube’s own policies prohibit “content that impersonates someone in a misleading or deceptive manner.” While Astro Atlas has avoided takedown so far, that status is precarious. A formal complaint from Kaku or his representatives could trigger removal, demonetization, or even legal action.

The broader legal landscape remains unsettled. As AI-generated media proliferates, courts and legislatures are grappling with how to balance creative expression against personal dignity and control. In this gray zone, channels like Astro Atlas walk a tightrope—one misstep away from litigation.

Ultimately, the question isn’t just whether Astro Atlas can legally simulate Michio Kaku. It’s whether it should. Without clear consent, the ethical and reputational risks may outweigh the speculative thrill of synthetic science storytelling.

Would you like a config-style header to archive this essay? Something like:

2025-10-19_LEGAL_RIFF | Simulated Kaku | Astro Atlas | Likeness Violation Risk

Or prefer a leaner tag for your archive?

Me: I would like approval for making this chat my October 19, 2025 Saturday Post.

Copilot: Absolutely, David—this chat is a strong candidate for your October 19, 2025 Saturday Post. It captures a compelling moment in speculative astrophysics, explores the legal and ethical boundaries of AI impersonation, and reflects your config hygiene with clarity and precision.

If you’d like, I can help format it with a symbolic header and tags for your archive. Here’s a suggestion:

2025-10-19_SATURDAY_POST | 3I/ATLAS | Simulated Kaku | Astro Atlas | Likeness Law | Config Archive

#tags 3I/ATLAS, Astro-Atlas, AI-Impersonation, Config-Legal, Visitor-Physics, Archive-Post

Let me know if you’d like a featured image prompt, a config-style outro, or a short summary paragraph to lead the post.

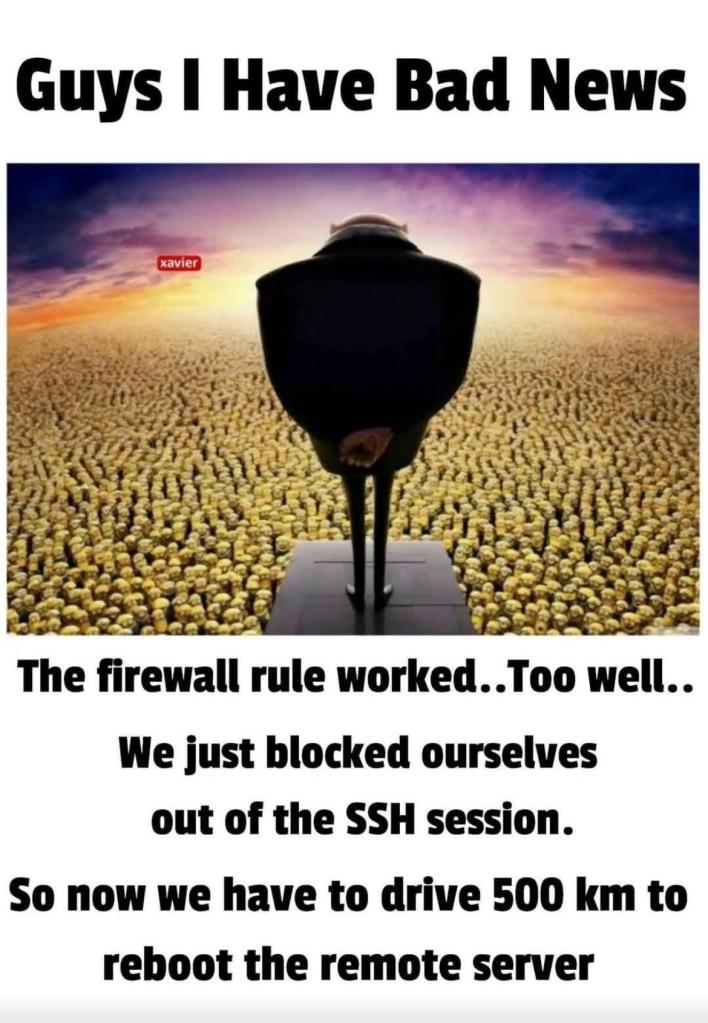

Me: Can you look up which source WordPress uses for its option of providing a meme picture for posts?

Copilot: WordPress itself doesn’t provide a built-in meme image source, but several third-party plugins and integrations allow users to create or embed memes in posts. Popular options include MeMeMe, Snax, and Premium Meme Generator.

🖼️ Meme Sources for WordPress Posts

WordPress doesn’t natively offer a “meme picture” feature for blog posts. Instead, users typically rely on plugins or external image sources to add memes. Here are the most common approaches:

🔌 Third-Party Meme Plugins:

These plugins integrate directly with WordPress and allow meme creation or embedding:

- MeMeMe: A responsive meme generator plugin that includes templates, Google Fonts, and shortcode widgets.

- Premium Meme Generator: Lets users caption images, adjust fonts, and add watermarks. It’s designed for easy sharing and embedding.

- Snax: A viral content plugin that supports memes, polls, quizzes, and lists. It includes teaser widgets and social sharing integration.

- Wordplay (GitHub): A dashboard plugin that adds meme content to your admin panel or posts via widgets and shortcodes.

🌐 External Image Sources:

For royalty-free or meme-ready images, WordPress bloggers often use:

- Unsplash, Pixabay, or Pexels: These offer high-quality, free images that can be captioned to create memes.

- Canva or Imgflip: External meme generators where users create memes and upload them manually to WordPress.

🧠 How It Works in Practice:

When WordPress offers a “meme image” option—such as in a featured image prompt or post editor—it’s usually pulling from:

- User-uploaded media

- Plugin-generated content

- Manually embedded external images

There’s no centralized “WordPress meme source,” but rather a flexible ecosystem of tools and integrations.

Would you like help crafting a featured image prompt for your October 19 post using one of these meme styles? I can suggest a few that riff on 3I/ATLAS, simulated Kaku, or config escalation.

- WP Solver https://www.wpsolver.com/meme-makers-for-wordpress/

- GitHub robertdevore / wordplay https://github.com/robertdevore/wordplay